Inference Architecture MLOps Pattern for Large Language Models in the cloud

Licence: CC BY-NC-ND 4.0

Initially published on this blog

MLOps : Inference Architecture Pattern

Context

Natural Language Processing (NLP) applications are AI applications that work on text. They use Large Language Models (LLMs) with billions of parameters, built with deep learning techniques. To compute a vector representation of a text efficiently, the model must, most of the time, be loaded and executed on a Graphics Processing Unit (GPU).

GPU servers are expensive, more than 1 euro per hour on AWS, and most of the time the LLM is not optimized.

Basic Architecture

In a simple architecture, you deploy your application on a GPU Server.

Most of the time, your application chains four steps:

- A user interface: a web page built with Gradio or Streamlit

- A data preparation step: you convert your input data into a vector with different operations such as removing stop words, lowercasing, merging with an external data source, etc.

- An inference computing task: you submit your text to an LLM to retrieve a vector

- A second data preparation step: you format and sort the results (computing nearest neighbors, for example) and translate them into text, scores, links, etc. so that the user interface layer can present them
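The two data preparation steps above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the stop-word list, the toy 3-dimensional vectors and the function names are all hypothetical, and the vectors stand in for the embeddings a real LLM would return.

```python
import math

# Hypothetical stop-word list, for illustration only.
STOP_WORDS = {"a", "an", "the", "is", "of", "in", "to"}


def prepare(text):
    """First data preparation step: lowercase the text and drop stop words."""
    return [w for w in text.lower().split() if w not in STOP_WORDS]


def cosine(u, v):
    """Cosine similarity between two vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def nearest(query_vec, corpus, k=2):
    """Second data preparation step: the k (score, document) pairs closest to query_vec."""
    scored = [(cosine(query_vec, vec), doc) for doc, vec in corpus.items()]
    return sorted(scored, reverse=True)[:k]


# Toy vectors standing in for real LLM embeddings.
corpus = {
    "doc-a": [1.0, 0.0, 0.0],
    "doc-b": [0.0, 1.0, 0.0],
    "doc-c": [0.7, 0.7, 0.0],
}

tokens = prepare("The leader of the opposition")
results = nearest([1.0, 0.1, 0.0], corpus)
```

Only the middle step (turning the prepared text into a vector) needs the GPU, which is what makes the split described later in this article possible.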

Drawbacks

Resource usage is not optimal in that case: most of the time you pay for an expensive GPU server for each application, and not on demand.

An alternative is to migrate to a newer product such as Banana Dev, where you can use GPUs on demand, much like Lambda functions on AWS.

But if you must stay in an AWS environment, you can distribute your application's functions as follows to reduce cost and optimize resource usage. Obviously, splitting the application will demand more work from your developers.

Optimized Architecture

In this architecture, the user interface is hosted on an AWS EC2 t2.nano instance; it costs only 5 euros per month to serve your web application.

Data preparation is a costly operation, but hosted as an AWS Lambda function you pay only on demand, request by request, no more.

NVIDIA Triton Inference Server is the keystone of the architecture. It hosts your LLM efficiently and is reachable via HTTP or gRPC. Your inference will be computed in a few milliseconds.

Of course, if you deploy only one application, your bill stays more or less the same. This pattern makes sense when you deploy several applications.

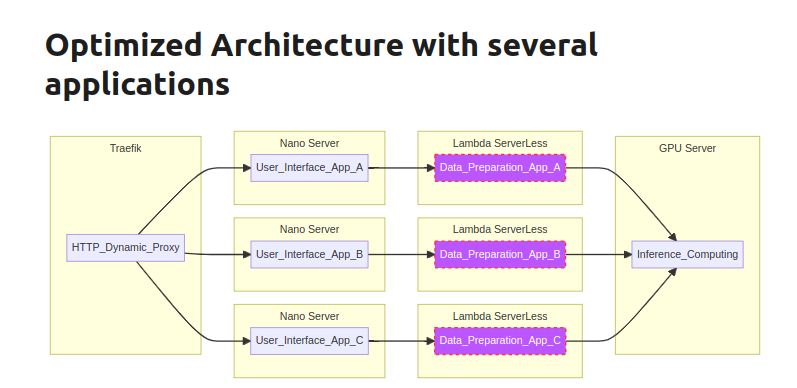

Optimized Architecture with several applications

If you host multiple applications, the nano server costs are negligible and the data preparation steps are already fully optimized, since you pay on demand. Of course, you still have a GPU server, but only one this time.
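A rough back-of-the-envelope comparison makes the argument concrete. The sketch below uses the two figures quoted in this article (about 1 euro per hour for a GPU server, 5 euros per month for a nano server). The Lambda cost per application and the 730 hours per month are assumptions chosen for illustration, not measured values.

```python
HOURS_PER_MONTH = 730  # assumption: 24 h x 365 days / 12 months


def basic_cost(n_apps, gpu_eur_per_hour=1.0):
    """Basic architecture: one always-on GPU server per application."""
    return n_apps * gpu_eur_per_hour * HOURS_PER_MONTH


def optimized_cost(n_apps, gpu_eur_per_hour=1.0,
                   nano_eur_per_month=5.0, lambda_eur_per_month=10.0):
    """Optimized architecture: one shared GPU server, plus a nano server
    and on-demand Lambda usage per application.
    lambda_eur_per_month is a hypothetical placeholder."""
    shared_gpu = gpu_eur_per_hour * HOURS_PER_MONTH
    return shared_gpu + n_apps * (nano_eur_per_month + lambda_eur_per_month)


for n in (1, 5):
    print(n, basic_cost(n), optimized_cost(n))
```

Under these assumptions, a single application costs about the same in both designs (730 versus 745 euros per month), while five applications cost 3650 euros in the basic design and 805 euros in the optimized one.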

Technical implementation notes

Triton Inference Server

Optimize and convert a Hugging Face model

Using the transformer-deploy tool, we convert the model as follows:

    docker run -it --rm --gpus all \
      -v $PWD:/project ghcr.io/els-rd/transformer-deploy:0.5.3 \
      bash -c "cd /project && \
        convert_model -m \"philschmid/MiniLM-L6-H384-uncased-sst2\" \
        --backend tensorrt onnx \
        --seq-len 16 128 128"

The generated output configuration may be wrong: check the size of the output vector in the configuration file, for example

models_repository/all-MiniLM-L6-v2_model/config.pbtxt

    output {
      name: "output"
      data_type: TYPE_FP32
      dims: [-1, 384]
    }

Here, 384 replaces a -1 left in the generated file.
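This check can be automated with a small script. The sketch below is an assumption-laden illustration, not part of transformer-deploy or Triton: it only understands the simple `output { ... }` form shown above, treats the first dimension as the batch dimension, and the function names are hypothetical.

```python
import re

# Matches an 'output { ... }' block and captures its name and its dims list.
OUTPUT_BLOCK = re.compile(
    r'\boutput\s*\{[^}]*?name:\s*"([^"]+)"[^}]*?dims:\s*\[([^\]]*)\]'
)


def output_dims(config_text):
    """Return {output name: [dims]} for every output block found."""
    return {
        name: [int(d) for d in raw.split(",") if d.strip()]
        for name, raw in OUTPUT_BLOCK.findall(config_text)
    }


def unresolved_outputs(config_text):
    """Names of outputs whose non-batch dimensions still contain -1."""
    return [n for n, dims in output_dims(config_text).items() if -1 in dims[1:]]


fixed = 'output {\n  name: "output"\n  data_type: TYPE_FP32\n  dims: [-1, 384]\n}'
bugged = fixed.replace("[-1, 384]", "[-1, -1]")

print(unresolved_outputs(fixed))
print(unresolved_outputs(bugged))
```

In practice you would pass the content of config.pbtxt read from the models repository instead of the inline strings.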

Launch Triton server

Here we forward Triton's HTTP port (8000) to port 9000 on the host:

    docker run -it --rm --gpus all -p9000:8000 -p9001:8001 -p9002:8002 \
      --network=dev \
      --shm-size 128g \
      --name triton \
      -v $PWD/models_repository:/models \
      nvcr.io/nvidia/tritonserver:22.07-py3 \
      bash -c "pip install transformers && tritonserver --model-repository=/models" \
      2>&1 | tee triton.log
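Once the server is up, it can be queried over HTTP with the KServe v2 inference protocol that Triton implements (`POST /v2/models/<model>/infer`). The sketch below uses only the standard library. The model name, the input tensor name `TEXT` and the shape `[1]` are assumptions: check the `config.pbtxt` files in your own models repository and adjust them.

```python
import json
import urllib.request

TRITON_URL = "http://localhost:9000"       # host port mapped to Triton's HTTP port 8000
MODEL_NAME = "transformer_onnx_inference"  # assumption: use the name from your repository


def build_infer_request(text, input_name="TEXT"):
    """Build a KServe v2 inference payload carrying one string.
    The input name and shape are assumptions about the deployed model."""
    return {
        "inputs": [
            {"name": input_name, "shape": [1], "datatype": "BYTES", "data": [text]}
        ]
    }


def infer(text, base_url=TRITON_URL, model=MODEL_NAME, timeout=10):
    """POST the payload to /v2/models/<model>/infer and return the decoded JSON."""
    request = urllib.request.Request(
        f"{base_url}/v2/models/{model}/infer",
        data=json.dumps(build_infer_request(text)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)
```

Calling `infer("some text")` against a running server should return a JSON document whose `outputs` entry holds the vector. For production use, NVIDIA's own client libraries are likely a better fit than raw HTTP calls.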

Function as a Service: AWS Lambda

Build the function container

If you choose to deploy it as a container, your Dockerfile should look something like this:

    FROM public.ecr.aws/lambda/python:3.8
    COPY requirements.txt .
    RUN pip3 install -r requirements.txt --target "${LAMBDA_TASK_ROOT}"
    WORKDIR /var/task
    COPY lambda.py ${LAMBDA_TASK_ROOT}
    COPY inference.py .
    COPY query.py .
    ADD embeddings /var/task/embeddings
    CMD [ "lambda.handler" ]

In this example, we add the embeddings and the other Python modules to the image.

Here is the Python code of the handler, which calls the get_results function with the query carried by the event:

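    import logging

    from query import get_results

    logger = logging.getLogger()
    logger.setLevel(logging.INFO)


    def handler(event, context):
        # Log the incoming event, then run the query it carries.
        logger.info('Event: %s', event)
        # Consume get_results into a list so the response can be serialized to JSON.
        return list(get_results(event['query']))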

To test it, run the container and then the following command:

curl -XPOST "http://localhost:9999/2015-03-31/functions/function/invocations" -d '{"query":"When norway decided to extend its fishery border ?"}'
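The handler can also be exercised without the container, directly from Python. In the sketch below, `get_results` is a hypothetical stand-in for the real function in query.py (which is not shown in this article); the only property taken from the article is that each result carries a `score` field.

```python
import logging

logger = logging.getLogger()
logger.setLevel(logging.INFO)


def get_results(query):
    """Hypothetical stand-in for query.get_results: yields scored hits."""
    for rank, text in enumerate(["first hit", "second hit"]):
        yield {"text": text, "score": 1.0 - 0.1 * rank, "query": query}


def handler(event, context):
    """Same shape as the Lambda handler: event in, list of results out."""
    logger.info("Event: %s", event)
    return list(get_results(event["query"]))


# The event has the same shape as the JSON body sent with curl.
response = handler({"query": "When did Norway extend its fishery border?"}, None)
print(response)
```

The second argument is the Lambda context object, which this handler never reads, so `None` is enough for a local call.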

Deploy the function container

- Push the Docker image to an AWS ECR repository, after creating the repository

- Configure the VPC of the Lambda function and, if needed, create a security group; in our example we must allow port 9000

- Add an environment variable; in our example, the Python code reaches the Triton server via the environment variable TRITON_SERVER_URL
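Inside the function, the variable can be read once and validated early, so that a missing configuration fails with a clear message rather than with a connection error later. This is a minimal sketch; the helper name and the example address are hypothetical.

```python
import os


def triton_base_url(environ=os.environ):
    """Return the Triton base URL from TRITON_SERVER_URL, without a trailing slash."""
    url = environ.get("TRITON_SERVER_URL", "").rstrip("/")
    if not url:
        raise RuntimeError("TRITON_SERVER_URL is not set")
    return url


# Example with an explicit mapping instead of the real environment.
print(triton_base_url({"TRITON_SERVER_URL": "http://10.0.0.5:9000/"}))
```

Passing the mapping as a parameter keeps the helper easy to test outside Lambda.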

Create an API Gateway

The Lambda function is triggered by an HTTP request through the API Gateway.

- Create a resource "/" and a method POST

- Integration request: integration type Lambda

- Mapping templates: request body passthrough "Never", Content-Type application/json, and this template: $input.json('$')

- Method response: application/json => Empty

Configure API

Create a dedicated VPC endpoint.

Resource Policy:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Deny",
          "Principal": "*",
          "Action": "execute-api:Invoke",
          "Resource": "arn:aws:execute-api:eu-XXX.../{{stageNameOrWildcard}}/{{httpVerbOrWildcard}}/{{resourcePathOrWildcard}}",
          "Condition": {
            "StringNotEquals": {
              "aws:sourceVpce": "vpce-XXXXX"
            }
          }
        },
        {
          "Effect": "Allow",
          "Principal": "*",
          "Action": "execute-api:Invoke",
          "Resource": "arn:aws:execute-api:eu-XXX.../prod/POST/"
        }
      ]
    }
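The policy combines a broad Allow with a Deny for every request that does not arrive through the dedicated VPC endpoint. Since an explicit Deny always wins over an Allow, only traffic from that endpoint gets through. The sketch below is a deliberately simplified reading of that logic in Python, not a reimplementation of IAM policy evaluation: it ignores resource matching, and the function name is hypothetical.

```python
def is_invoke_allowed(source_vpce, allowed_vpce="vpce-XXXXX"):
    """Simplified reading of the resource policy above.

    source_vpce is the VPC endpoint the request came through, or None
    when the request did not come through any VPC endpoint.
    """
    # Deny statement: StringNotEquals also matches when the key is absent.
    denied = source_vpce != allowed_vpce
    # Allow statement: applies to every principal.
    allowed = True
    # An explicit Deny overrides any Allow.
    return allowed and not denied


print(is_invoke_allowed("vpce-XXXXX"))
print(is_invoke_allowed("vpce-other"))
print(is_invoke_allowed(None))
```

Only the first call returns True: requests from another endpoint, or from the public internet, are rejected.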

Deploy API

Deploy to a stage, prod for example, and keep a record of the URL, for example:

Invoke URL: https://XXXX.execute-api.eu-XXX.amazonaws.com/prod

Web Interface

As with any other traditional app, you deploy your interface as follows:

    FROM python:3.9-slim
    # Document the port Streamlit actually listens on (see ENTRYPOINT below).
    EXPOSE 8080
    WORKDIR /app
    RUN apt-get update && apt-get install -y \
        build-essential \
        software-properties-common \
        git \
        && rm -rf /var/lib/apt/lists/*
    ADD requirements.txt /app/requirements.txt
    RUN pip3 install -r requirements.txt
    COPY streamlit_app.py .
    COPY style.css .
    ENTRYPOINT ["streamlit", "run", "streamlit_app.py", "--server.port=8080", "--server.address=0.0.0.0"]

The application code stays very simple:

    import os
    import time

    import requests
    import streamlit as st

    # Inject the custom stylesheet into the page.
    with open("style.css") as css:
        st.markdown(f'<style>{css.read()}</style>', unsafe_allow_html=True)

    st.title('XXX Search Engine [beta]')
    st.subheader("Query: ")
    # Another example query: "What is Ethiopia's rank in terms of press freedom?"
    query = st.text_input('', 'Who is the leader of opposition party in Venezuela ?')

    # The API URL comes from the environment; the default targets a local Lambda container.
    api_url = os.environ.get("TT_API_URL", "http://localhost:9999/2015-03-31/functions/function/invocations")

    # Time the round trip to the API.
    start = time.time()
    results = requests.post(api_url, headers={"Content-Type": "application/json"}, json={"query": query}).json()
    end = time.time()

    # st.write renders in the page; print would only reach the server log.
    for i, r in enumerate(results):
        st.write(f"Result: ({i} | score {r['score']})")

Build the docker image:

docker build -t app-XXX:latest -f Dockerfile.streamlit .

And run it:

    docker run \
      --restart=always \
      -e TT_API_URL=https://XXXXXXXX.eu-XX.amazonaws.com/prod/ \
      -p 8080:8080 \
      app-XXX

Contact : https://foss4.eu/

Made with ❤️ from Brussels 🇪🇺

Sebastien Campion